Early this week, Microsoft announced a project code named Velocity. Velocity is a distributed in-memory object caching system in the vein of memcached (aka a Distributed Hash Table). If you read any modern stories of the architectures of popular Web sites today such as the recently published overview of the LinkedIn social networking site's Web architecture, you will notice a heavy usage of in-memory caching to improve performance. Popular web sites built on the LAMP platform such as Slashdot, Facebook, Digg, Flickr and Wikipedia have all been using memcached to take advantage of in-memory storage to improve performance for years. It is good to see Microsoft step up with a similar technology for Web sites built on the WISC platform.

Like memcached, you can think of Velocity as a giant hash table that can run on multiple servers which automatically handles maintaining the balance of objects hashed to each server and transparently fetches/removes objects from over the network if they aren't on the same machine that is accessing an object in the hash table. In addition, you can add and remove servers from the cluster and the cache automatically rebalances itself.

The Velocity Logical Model

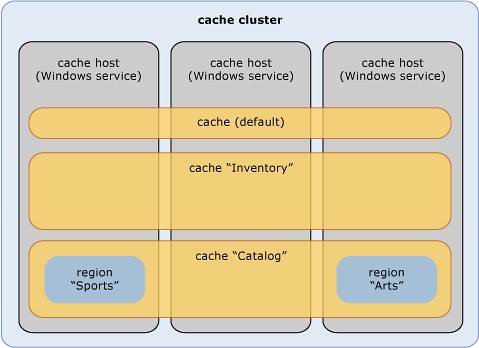

The following diagram taken from the Velocity documentation is helpful in discussing its logical model in detail

In the above diagram, each cache host is a server that participates in the cache cluster. Your application can have multiple named caches (e.g. "Inventory", "Product Catalog", etc) each of which can be configured separately. In addition, each named cache can have one or more named region. For example, the Sports region of your Product Catalog or the Arts region of your product catalog. Below is some sample code that shows putting and getting objects in and out of a named cache.

CacheFactory CacheCluster1 = new CacheFactory();

Cache inventoryCache = CacheCluster1.GetCache("Inventory");

Sneaker s = (Sneaker)inventoryCache.Get("NikeAirForce1");

s.price = s.price * 0.8; //20% discount

inventoryCache.Put("NikeAirForce1", s);

Velocity ships with the ability to search for objects by tag but it is limited to objects within a specific region. So you can fetch all objects tagged "Nike" or "sneakers" from the sports region of your product catalog. As shown in the above diagram, a limitation of regions is that all items in a region must be on the same physical server. Below is an example of what the code for interacting with regions looks like

CacheFactory CacheCluster1 = new CacheFactory();

Cache catalog= CacheCluster1.GetCache("Catalog");

List <KeyValuePair<string, object>> sneakers = catalog.GetByTag("Sports", "sneakers");

foreach (var kvp in sneakers)

{

Sneaker s = kvp.Value as Sneaker;

/* do stuff with Sneaker object */

}

The above sample searches for all items tagged "sneakers" from the Sports region of the Catalog cache.

The notion of regions and tagging is one place Velocity diverges from the simpler model of technologies like memcached and provides more functionality.

Eviction Policy and Explicit Object Expiration

Since memory is limited on a server, there has to be an eviction policy that ensures that the cache doesn't end up growing to big thus forcing the operating system to get all Virtual Memory on your ass by writing pages to disk. Once that happens you're in latency hell since fetching objects from the cache will involve going to disk to fetch them. Velocity gives you a couple of knobs that can be dialed up or down as needed to control how eviction or expiration of objects from the cache works. There is a file called ClusterConfig.xml which is used for configuring the eviction and expiration policy of each named cache instance. Below is an excerpt of the configuration file showing the policies for some named cache instances

<!-- Named cache list -->

<caches>

<cache name="default" type="partitioned">

<policy>

<eviction type="lru" />

<expiration isExpirable="false" />

</policy>

</cache>

<cache name="Inventory" type="partitioned">

<policy>

<eviction type="lru" />

<expiration isExpirable="true" defaultTTL="50" />

</policy>

</cache>

</caches>

The above excerpt indicates that the default and Inventory caches utilize a Least Recently Used algorithm for determining which objects are evicted from the cache. In addition, it specifies the default interval after which an object can be considered to be stale in the Inventory cache.

The default expiration interval can actually be overridden when putting an object in the cache by specifying a TTL parameter when calling the Put() method.

Concurrency Models: None, Optimistic, or Pessimistic

One of the first things you learn about distributed computing in the real world is that locks are bad mojo. In the way locks traditionally work, an object can be locked by a caller meaning everyone else interested in the object has to wait their turn. Although this prevents errors in your system occurring due to multiple callers interacting with the object at once, it also means there are built-in bottle necks in your system. So lesson #1 of scaling your service is often to get rid of as many locks in your code as possible. Eventually this leads to systems like eBay which doesn't use database transactions and Amazon's Dynamo which doesn't guarantee data consistency.

So what does this have to do with Velocity? Systems designed to scale massively like memcached don't support concurrency. This leads to developers asking questions like this one taken from the memcached mailing list

Consider this situation:-

- A list with numeric values: 1,2,3

- Server1: Gets the list from memcache.

- Server2: Also gets the list from memcache.

- Server1: Removes '1' from the list.

- Server2: Removes '3' from the list.

- Server1: Puts back a list with 2,3 in list in memcache.

- Server2: Puts back a list with 1,2 in list in memcache.

Note:Since, both servers have their instance of list objs.

This is not what we need to do. Becoz, both servers are putting an incorrect list in memcache.Ideally what shud have happened was that in the end a list with only '1' shud be put back in memcache. This problem occurs under load and happens in case of concurrent threads.

What I want is that memcache shud restrict Server2 and a consistent list shud be there in memcache. How do I handle such problem in memcache environment?? I know we can handle at application server end by doing all these operations through a centralized place(gets and puts), but how do I handle it in Memcache????

Any help wud be appreciated?

Unfortunately for the author of the question above, memcached doesn't provide APIs for concurrent access and enforcing data consistency (except for numeric counters). So far, the code samples I've shown for Velocity also do not support concurrency.

However there are APIs for fetching or putting objects that support optimistic and pessimistic concurrency models. In the optimistic concurrency model, instead of taking a lock, the objects are given a version number and the caller is expected to specify the version number of the object they have modified when putting it back in the cache. If the object has been modified since the time it was retrieved then there is a version mismatch error. At this point, the caller is expected to re-fetch the object and make their changes to the newly retrieved object before putting it back in the cache. Below is a code sample taken from the Velocity documentation that illustrates what this looks like in code

/* At time T0, cacheClientA and cacheClientB fetch the same object from the cache */

//-- cacheClientA pulls the FM radio inventory from cache

CacheFactory clientACacheFactory = new CacheFactory();

Cache cacheClientA = clientBCacheFactory.GetCache("catalog");

CacheItem radioInventory = cacheClientA.GetCacheItem("electronics", "RadioInventory");

//-- cacheClientB pulls the same FM radio inventory from cache

CacheFactory clientBCacheFactory = new CacheFactory();

Cache cacheClientB = clientBCacheFactory.GetCache("catalog");

CacheItem radioInventory = cacheClientA.GetCacheItem("electronics", "RadioInventory");

//-- At time T1, cacheClientA updates the FM radio inventory

int newRadioInventory = 155;

cacheClientA.Put("electronics", "RadioInventory", newRadioInventory,

radioInventory.Tags, radioInventory.Version);

//-- Later, at time T2, cacheClientB tries to update the FM radio inventory

// AN ERROR OCCURS HERE

int newRadioInventory = 130;

cacheClientB.Put("electronics", "RadioInventory", newRadioInventory,

radioInventory.Tags, radioInventory.Version);

In the pessimistic concurrency model, the caller specifically takes a lock by calling GetAndLock() with a lock time out. The lock is then held until the time out or until the object is put back using PutAndUnlock(). To prevent this from being a performance nightmare, the system does not block requests if a lock is held on an object they want to manipulate. Instead the request is rejected (i.e. it fails).

Update: Some people have commented here and elsewhere that memcached actually does support the optimistic concurrency model using the gets and cas commands. Sorry about that, it wasn't exposed in the memcached libraries I've looked at.

Final Thoughts

From my perspective, this is a welcome addition to the WISC developer's toolkit. I also like that it pushes the expectations of developers on what they should expect from a distributed object cache which I expect will end up being good for the industry overall and not just developers on Microsoft's platforms.

If the above sounds interesting, there is already a technology preview available for download from MSDN here. I've downloaded it but haven't tried to run it yet since I don't have enough machines to test it in the ways I would find interesting. As you can expect there is a Velocity team blog. Subscribed.

Now Playing: 50 Cent - These N*ggas Ain't Hood (feat. Lloyd Banks & Marquis)