A few weeks ago Dalton Caldwell, founder of imeem and Picplz, wrote a well received blog post titled What Twitter could have been where he laments the fact that Twitter hasn’t fulfilled some of the early promise developers saw in it as a platform. Specifically he writes

Perhaps you think that Twitter today is a really cool and powerful company. Well, it is. But that doesn’t mean that it couldn’t have been much, much more. I believe an API-centric Twitter could have enabled an ecosystem far more powerful than what Facebook is today. Perhaps you think that the API-centric model would have never worked, and that if the ad guys wouldn’t have won, Twitter would not be alive today. Maybe. But is the service we think of as Twitter today really the Twitter from a few years ago living up to its full potential? Did all of the man-hours of brilliant engineers, product people and designers, and hundreds of millions of VC dollars really turn into, well, this?

His blog post struck a chord with developers which made Dalton follow it up with another blog post titled announcing an audacious proposal as well as launching the join.app.net. Dalton’s proposal is as follows

I believe so deeply in the importance of having a financially sustainable realtime feed API & service that I am going to refocus App.net to become exactly that. I have the experience, vision, infrastructure and team to do it. Additionally, we already have much of this built: a polished native iOS app, a robust technical infrastructure currently capable of handing ~200MM API calls per day with no code changes, and a developer-facing API provisioning, documentation and analytics system. This isn’t vaporware.

To manifest this grand vision, we are officially launching a Kickstarter-esque campaign. We will only accept money for this financially sustainable, ad-free service if we hit what I believe is critical mass. I am defining minimum critical mass as $500,000, which is roughly equivalent to ~10,000 backers.

As can be expected as someone who’s worked on software as both an API client and platform provider of APIs, I have a few thoughts on this topic. So let’s start from the beginning.

The Promise of Twitter Annotations

About two years ago, Twitter CEO Dick Costolo wrote an expansive “state of the union” style blog post about the Twitter platform. Besides describing the state of the Twitter platform at the time it also set forth the direction the platform intended to go in and made a set of promises about how Twitter saw its role relative to its developer ecosystem. Dick wrote

To foster this real-time open information platform, we provide a short-format publish/subscribe network and access points to that network such as www.twitter.com, m.twitter.com and several Twitter-branded mobile clients for iPhone, BlackBerry, and Android devices. We also provide a complete API into the functions of the network so that others may create access points. We manage the integrity and relevance of the content in the network in the form of the timeline and we will continue to spend a great deal of time and money fostering user delight and satisfaction. Finally, we are responsible for the extensibility of the network to enable innovations that range from Annotations and Geo-Location to headers that can route support tickets for companies. There are over 100,000 applications leveraging the Twitter API, and we expect that to grow significantly with the expansion of the platform via Annotations in the coming months.

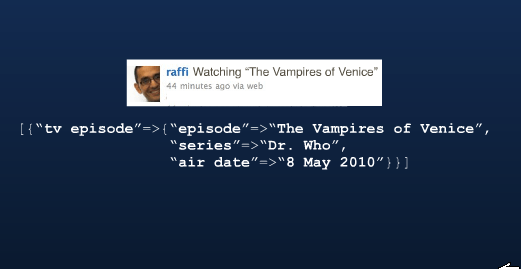

There was a lot of excitement in the industry about Twitter Annotations both from developers as well as the usual suspects in the tech press like TechCrunch and ReadWriteWeb. Blog posts such as How Twitter Annotations Could Bring the Real-Time and Semantic Web Together show how excited some developers were by the possibilities presented by the technology. For those who aren’t familiar with the concept, annotations were supposed to be the way to attach arbitrary metadata to a tweet beyond the well-known 140 characters. Below is a screenshot from a Twitter presentation showing this concept visually

Although it’s been two years since the Twitter Annotations was supposed to begin testing this feature has never been released nor has it talked about by the Twitter Platform team in recent months. Many believe that Twitter Annotations will never be released due to a changing of the guard within Twitter which is hinted at in Dalton Caldwell’s What Twitter could have been post

As I understand, a hugely divisive internal debate occurred among Twitter employees around this time. One camp wanted to build the entire business around their realtime API. In this scenario, Twitter would have turned into something like a realtime cloud API company. The other camp looked at Google’s advertising model for inspiration, and decided that building their own version of AdWords would be the right way to go.

As you likely already know, the advertising group won that battle, and many of the open API people left the company.

It is this seeming change in direction that Dalton has seized on to create join.app.net.

Why Twitter Annotations was a Difficult Promise to Make

I have no idea what went on within Twitter but as someone who has built both platforms and API clients the challenges in delivering a feature such as Twitter Annotations seem quite obvious to me. A big problem when delivering a platform as part of an end user facing service is that there are often trade offs one has to make at the expense of the other. Doing things that make end users happy may end up ticking off people who’ve built businesses on your platform (e.g. layoffs caused by Google Panda update, companies losing customers overnight after launch of Facebook's timeline, etc). On the other hand, doing things that make developers happy can create suboptimal user experiences such as the “openness” of Android as a platform making it a haven for mobile malware.

The challenge with Twitter Annotations is that it threw user experience consistency out of the Window. Imagine that the tweet shown in the image above was created by Dare’s Awesome Twitter App which allows users to “attach” a TV episode from a source like Hulu or Netflix into the app before they tweet. Now in Dare’s Awesome Twitter App, the user sees their tweet an an inline experience where they can consume the video inline similar to what Twitter calls Expanded Tweets today. However the user’s friends who are using the Twitter website or other Twitter apps just see 140 characters. You can imagine that apps would then compete on how many annotations they supported and creating new interesting annotations. This is effectively what happened with RSS and a variety of RSS extensions with no RSS reader supporting the full gamut of extensions. Heck, I supported more RSS extensions in RSS Bandit in 2009 than Google Reader does today.

This cacophony of Annotations would have meant that not only would there no longer be such a thing as a consistent Twitter experience but Twitter itself would be on an eternal treadmill of supporting various annotations as they were introduced into the ecosystem by various clients and content producers.

Secondly, the ability to put arbitrary machine readable content in tweets would have made Twitter an attractive mechanism as a “free” publish-subscribe infrastructure of all manner of apps and devices. Instead of building a push notification system to communicate with client apps or devices, an enterprising developer could just create a special Twitter account and have all the end points connect to that. The notion of Twitter controlled botnets and the rise of home appliances connected to Twitter are all indicative that there is some demand for this capability. If this content not intended for humans ever becomes a large chunk of the data flowing through Twitter, how to monetize it will be extremely challenging. Charging people for using Twitter in this way isn’t easy since it isn’t clear how you differentiate a 20,000 machine botnet from a moderately popular Internet celebrity with a lot of fans who mostly lurk on Twitter.

I have no idea if Twitter ever plans to ship Annotations but if they do I’d be very interested to see how they would solve the two problems mentioned above.

Challenges Facing App.net as a Consumer Service

Now that we’ve talked about Twitter Annotations, let’s look at what App.net plans to deliver to address the demand that has yet to be fulfilled by the promise of Twitter Annotations. From join.app.net we learn the product that is intended to be delivered is

OK, great, but what exactly is this product you will be delivering?

As a member, you'll have a new social graph and real-time feed that you access from an App.net mobile application or website. At first, the user experience will be very similar to what Twitter was like before it turned into a media company. On a forward basis, we will focus on expanding our core experience by nurturing a powerful ecosystem based on 3rd-party developer built "apps". This is why we think the name "App.net" is appropriate for this service.

From a developer perspective, you will be able to read and write to a Twitter-like API. Developers behaving in good faith will have free reign to build alternate UIs, new business models of their own, and whatever they can dream up.

As this project progresses, we will be collaborating with you, our backers, while sharing and iterating screenshots of the app, API documentation, and more. There are quite a few technical improvements to existing APIs that we would like to see in the App.net API. For example, longer message character limits, RSS feeds, and rich annotations support.

In short it intends to be a Twitter clone with a more expansive API policy than Twitter. The key problem facing App.net is that as a social graph based services it will suffer from the curse of network effects. Social apps need to cross a particular threshold of people on the service before they are useful and once they cross that threshold it often leads to a “winner-take-all” dynamic where similar sites with smaller users bleed users to the dominant service since everyone is on the dominant service. This is how Facebook killed MySpace & Bebo and Twitter killed Pownce & Jaiku. App.net will need to differentiate itself more than just being “open version of popular social network”. Services like Status.net and Diaspora have tried and failed to make a dent with that approach while fresh approaches to the same old social graph and news feed like Pinterest and Instagram have grown by leaps and bounds.

A bigger challenge is the implication that it wants to be a paid social network. As I mentioned earlier, social graph based services live and die by network effects. The more people that use them the more useful they get for their members. Charging money for an online service is an easy way to reduce your target audience by at least an order of magnitude (i.e. reduce your target audience by at least 90%). As an end user, I don’t see the value of joining a Twitter-clone populated by people who could afford $50 a year as opposed to joining a free service like Facebook, Twitter or even Google+.

Challenges Facing App.net as a Developer Platform

As a developer it isn’t clear to me what I’m expected to get out of App.net. The question on how they came up with their pricing tiers is answered thusly

Additionally, there are several comps of users being willing to pay roughly this amount for services that are deeply valuable, trustworthy and dependable. For instance, Dropbox charges $10 and up per month, Evernote charges $5 and up per month, Github charges $7 and up per month.

The developer price is inspired by the amount charged by the Apple Developer Program, $99. We think this demonstrates that developers are willing to pay for access to a high quality development platform.

I’ve already mentioned above that network effects are working against App.net as a popular consumer service. The thought of spending $50 a year to target less users than I can by building apps on Facebook or Twitter’s platforms doesn’t sound logical to me. The leaves using App.net as a low cost publish-subscribe mechanism for various apps or devices I deploy. This is potentially interesting but I’d need to get more data before I can tell just how interesting it could be. For example, there is a bunch of talk about claiming your Twitter handle and other things which makes it sound like developers only get a single account on the service. True developer friendliness for this type of service would include disclosure on how one could programmatically create and manage nodes in the social graph (aka accounts in the system).

At the end of the day although there is a lot of persuasive writing on join.app.net, there’s just not enough to explain why it will be a platform that will provide me enough value as a developer for me to part with my hard earned $50. The comparison to Apple’s developer program is rich though. Apple has given developers five billion reasons why paying them $99 a year is worth it, we haven’t gotten one good one from App.net.

That said, I wish Dalton luck on this project. Props to anyone who can pick himself up and continue to build new things after what he went through with imeem and PicPlz.

Now Playing: French Montana - Pop That (featuring Rick Ross, Lil Wayne and Drake)

Now Playing: French Montana - Pop That (featuring Rick Ross, Lil Wayne and Drake)